I haven’t had much time to progress this. I am writing it up, but it is hard to photograph well and still a work in progress.

So far I have done some greyscale calibration to look at grey value versus extent of polymerisation in Z. Z was chosen because it is far easier to work with than X/Y.

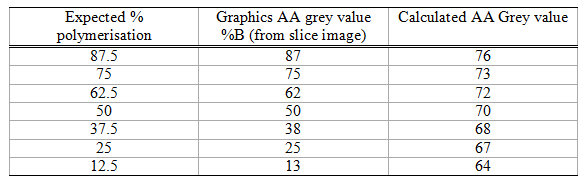

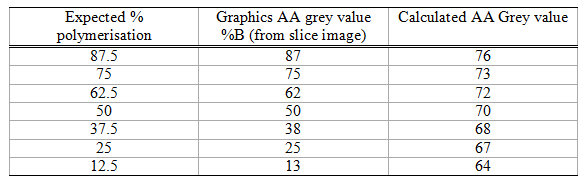

Grey values were manipulated in Photoshop using %B values (where 0%B is black and 100%B is white; something of an approximation, as they will be mapped onto a 256-level greyscale, but good enough).

Obviously this is somewhat resin-specific and will depend on the exposure settings.
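For anyone wanting to reproduce the grey values without Photoshop, here is a minimal sketch of the %B to 8-bit conversion. It assumes simple linear scaling with rounding (an assumption on my part, not a statement of how Photoshop works internally), and the function name is just illustrative.

```python
def percent_b_to_grey(percent_b: float) -> int:
    """Convert a brightness percentage (0-100 %B) to an 8-bit grey value.

    Assumes plain linear scaling onto 0-255, rounded to the nearest integer.
    """
    if not 0 <= percent_b <= 100:
        raise ValueError("percent_b must be between 0 and 100")
    return round(percent_b / 100 * 255)


# Print the RGB triplet for a few brightness values.
for pb in (0, 52, 88, 100):
    g = percent_b_to_grey(pb)
    print(f"{pb:3d}%B -> ({g},{g},{g})")
```

Because only 256 grey values are available, several nearby %B values can land on the same grey, which is why the %B figures are only approximate.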

In my test model it was relatively easy to visually assess the endpoint greys that coincided with full (over 88% B - 224,224,224) and nil (less than 52% B - 133,133,133) effective polymerisation of the layers. Visually, there was a smooth increase in layer thickness over this range, but I do not have the means to measure this.

The key take-home, therefore, is that the greyscale range currently used for AA is very different from the range that covers nil to full polymerisation.

If we assume that, within the effective range, the extent of polymerisation (= layer thickness) is sigmoidal, then we can map the grey level calculated by the AA algorithm to a grey level that should give the required exposure to the resin. Something like the table attached, where the calculated value gives the expected polymerisation; 50% polymerisation being 25u for a 50u layer.
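As a rough illustration of that mapping, the sketch below inverts a logistic curve between the two endpoint greys found in my test (133 and 224). The steepness value is a pure guess, not a measurement, and the code assumes the AA grey level encodes the wanted polymerisation linearly (0 = none, 255 = full). The function names are hypothetical, and the numbers it prints are not the attached table.

```python
import math

NIL_GREY = 133    # about 52%B: nil effective polymerisation in my test
FULL_GREY = 224   # about 88%B: full polymerisation in my test
STEEPNESS = 8.0   # ASSUMED logistic steepness; needs calibrating per resin
LAYER_UM = 50.0   # layer height in microns


def _logistic(t: float) -> float:
    return 1.0 / (1.0 + math.exp(-t))


def grey_for_polymerisation(target: float, k: float = STEEPNESS) -> int:
    """Grey level expected to give `target` (0-1) polymerisation.

    Models polymerisation as a logistic curve rescaled to run exactly
    from 0 at NIL_GREY to 1 at FULL_GREY, then inverts it.
    """
    target = min(max(target, 0.0), 1.0)
    lo, hi = _logistic(-k / 2), _logistic(k / 2)
    y = lo + target * (hi - lo)               # undo the 0-1 rescaling
    x = 0.5 + math.log(y / (1.0 - y)) / k     # inverse logistic (logit)
    x = min(max(x, 0.0), 1.0)                 # guard against float drift
    return round(NIL_GREY + x * (FULL_GREY - NIL_GREY))


def remap_aa_grey(aa_grey: int) -> int:
    """Map a grey level from the AA algorithm to an exposure grey level."""
    return grey_for_polymerisation(aa_grey / 255)


# Eight equal steps of wanted polymerisation and the grey that should give each.
for step in range(9):
    target = step / 8
    print(f"{target:5.1%}  {target * LAYER_UM:5.2f}u  grey {grey_for_polymerisation(target)}")
```

With a measured thickness curve, the logistic could be swapped for a lookup table; the inversion step stays the same.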

Thoughts so far:

- As expected, resin behaves differently from the human eye, and AA intended for visual graphics produces slice files that are not ideal for smoothing print surfaces.
- Current AA uses an 8-level grey scale. Assuming sub-pixel resolution is achievable, this would produce a theoretical resolution of about 6u for 50u pixels. This is probably more than needed. Even 25u would be a significant improvement in surface smoothness, and 12u would be extremely good for most purposes.
- Chasing XY AA too far without addressing Z-axis AA is probably wasted effort.
- I need to print some tests with no AA, with AA as currently implemented, and with a manually adjusted AA according to the attached table, to compare actual surface smoothness in the printed model for XY.
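The resolution figures in the thoughts above come from dividing the pixel size by the number of grey levels. A quick sketch of that arithmetic, assuming the edge position scales linearly with grey level (which is exactly what still needs testing); the function name is illustrative:

```python
PIXEL_UM = 50.0  # pixel size in microns


def sub_pixel_step(pixel_um: float, levels: int) -> float:
    """Theoretical edge-position step for a given number of grey levels."""
    if levels < 1:
        raise ValueError("levels must be at least 1")
    return pixel_um / levels


# 2, 4 and 8 levels correspond to the 25u, 12u and 6u figures.
for levels in (2, 4, 8):
    print(f"{levels} levels -> {sub_pixel_step(PIXEL_UM, levels):.2f}u")
```

So 2 useful grey levels would already give the 25u case, and 4 levels the roughly 12u case.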