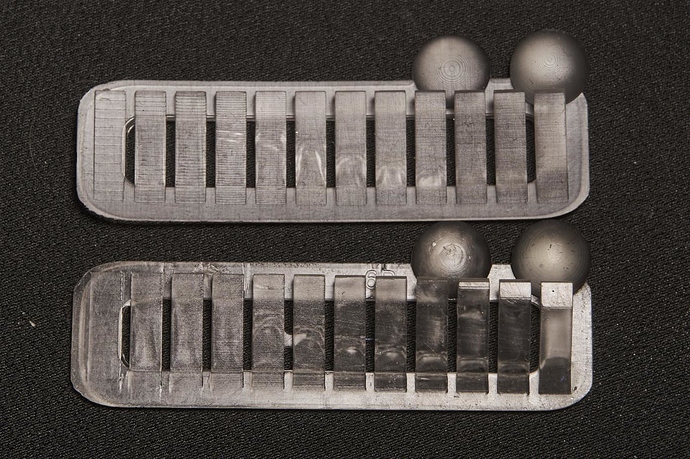

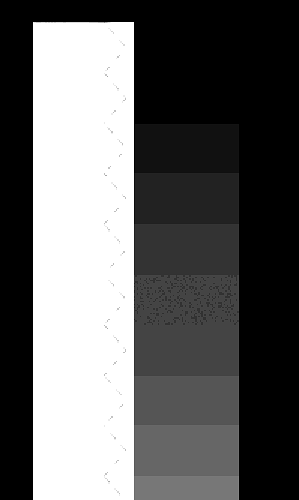

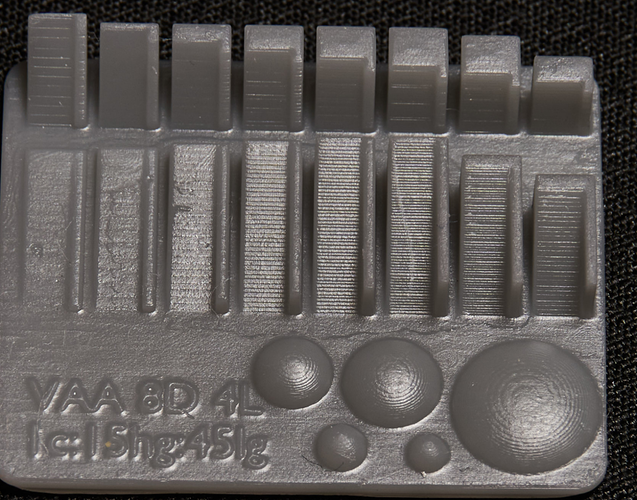

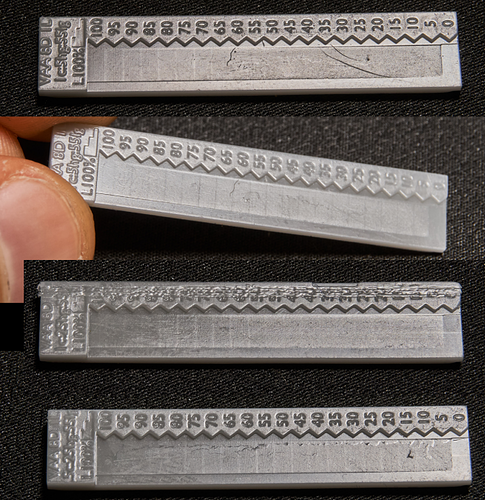

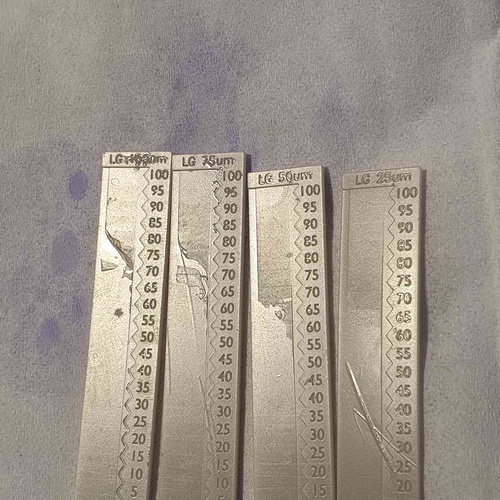

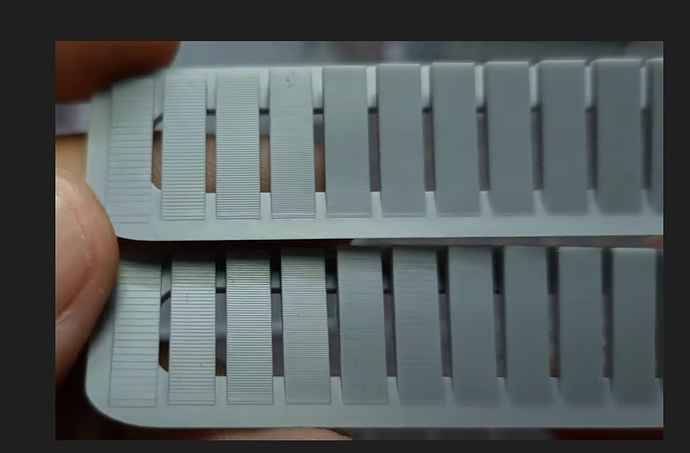

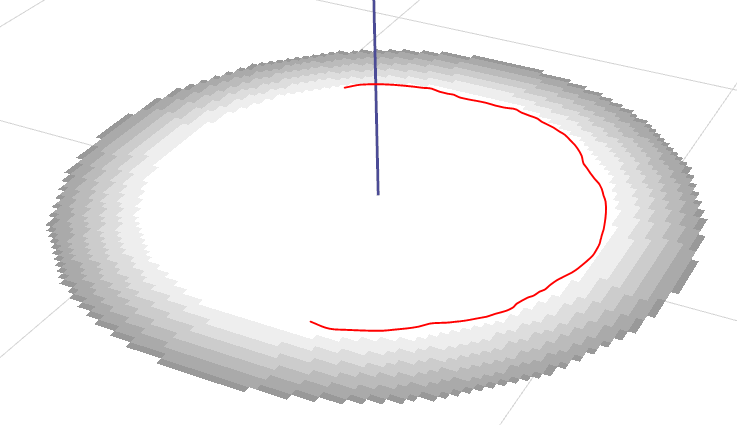

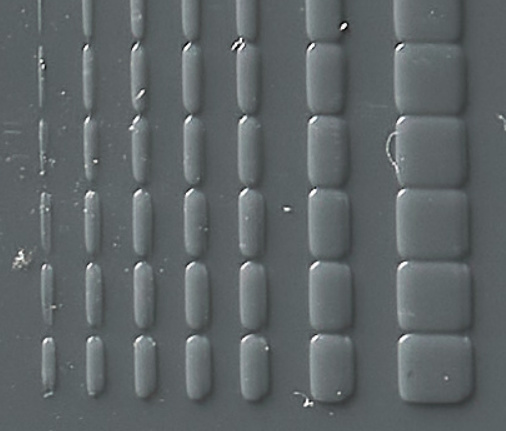

Here is a group of different linear gradient tests I did recently. I set up a .ctb4 file so that it printed all these different layer heights in one print: it prints at 50um, then at a certain point lifts 25um to print the 25um gradient while leaving the other ones blank, then lifts another 25um to print the 50um one, and so on. As you can see, 25um on my Saturn 1 was silky smooth; the last layer on that one isn't 25-30 microns thick, it's stupidly small. However, as the layer height gets higher the end gets more abrupt, because the resin starts to cure from the FEP up rather than from the plate down. Interestingly, even at 100um, at around 85 intensity there was some area where it did cure from the previous layer down rather than FEP first, but not at the lower intensities. The fact that there was even a tiny bit like that shows that with 80+ intensity I can actually print previous-layer-down on my printer at 100um. The problem is that with a normal layer exposure time that intensity builds almost a full 50um of layer thickness. But because we can see it did start from the previous layer at that intensity, the same intensity with shorter times should yield a thin layer at 50um.

I thought that more direct light would cure plate-down better at thicker layers. I can't test that because I don't have a printer with more direct light, but from this it's clear that better results at thicker layers can be achieved either with a far higher intensity printer or with an idea I've had which I call semi-continuous printing. I can't remember if I explained this fully here (again, I have a thread on Discord about it), but basically the idea is to go to a certain layer height, e.g. 50um, and then cure a few very thin partial layers to build up that one layer.
I've done some tests with this and it worked, but not consistently. At the time I couldn't do 0 lift: anything under 10um would default to a normal lift height, which makes multiple exposures a pain. But I discovered that in the G-code I can just change M8070 to Z0, and then 0 lift heights work, so I will have to do more testing on this at some point.
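Here is a rough sketch of how a semi-continuous layer could be planned, plus the M8070 edit as a text substitution. Everything in it is hypothetical: the `Exposure` record, the helper names, the equal split of exposure time across the partial exposures, the normal lift only after the last one, and especially the assumption that the relevant G-code line looks like `M8070 Z5`. Check your own file before running anything like this.

```python
import re
from dataclasses import dataclass


@dataclass
class Exposure:
    z_um: float      # plate height during this exposure
    seconds: float   # UV on-time for this partial exposure
    lift_um: float   # lift after the exposure (0 = stay in place)


def semi_continuous_layer(z_um, total_seconds, n_partials, final_lift_um):
    """Hypothetical: split one layer's exposure into n short exposures at the same Z.

    Assumes an equal time split, zero lift between the partial exposures,
    and a normal lift only after the last one.
    """
    if n_partials < 1:
        raise ValueError("n_partials must be >= 1")
    each = total_seconds / n_partials
    steps = [Exposure(z_um, each, 0.0) for _ in range(n_partials)]
    steps[-1].lift_um = final_lift_um
    return steps


# Assumption: the lift setting appears as a line like "M8070 Z5" (possibly with
# other arguments before Z). The real file format may differ.
_M8070_Z = re.compile(r"^(M8070\b.*?\bZ)-?\d+(?:\.\d+)?", re.MULTILINE | re.IGNORECASE)


def zero_m8070(gcode: str) -> str:
    """Rewrite the Z argument of every M8070 line to 0, leaving other lines alone."""
    return _M8070_Z.sub(r"\g<1>0", gcode)
```

For example, `semi_continuous_layer(50, 2.0, 4, 5000)` gives four 0.5 s exposures at the same height with a lift only after the last one, and `zero_m8070("M8070 Z5")` returns `"M8070 Z0"`.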

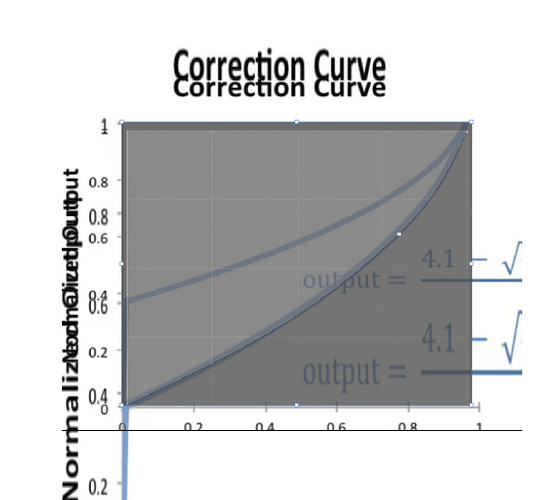

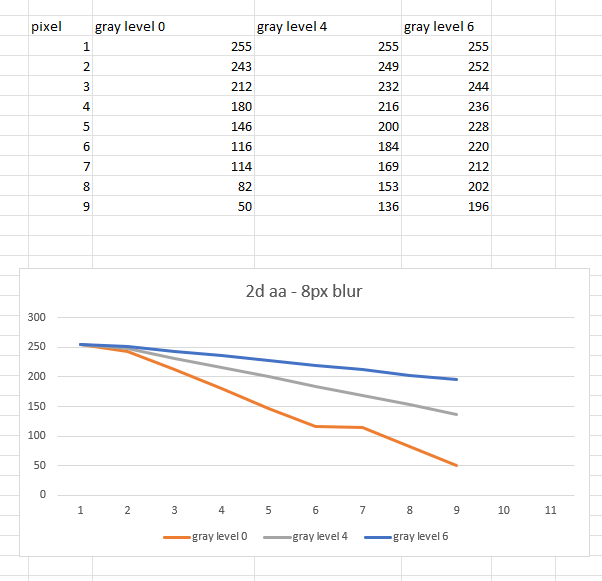

So yeah, you might have abrupt issues because the layer height is too high. You may also have them because your lower grey offset is too high; I found lowering it 10-15% from the lowest value of the linear gradient was a good spot. And yeah, the 4-bit screens on the Anycubics will also limit you, because if you are only using half the values that's about 7 values, which is not much.
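To make the 4-bit point concrete, here is a small sketch that maps a gradient onto screen grey levels above a grey offset. The function names are made up, values are on a 0-1 scale, and I'm assuming "10-15% lower" means a relative reduction of the lowest value that printed on the linear gradient test.

```python
def lowered_offset(lowest_gradient_value: float, reduction: float = 0.125) -> float:
    """Grey offset set a little below the lowest value that cured on a linear-gradient test.

    Assumption: the reduction is relative (0.125 = 12.5% lower), values in 0..1.
    """
    return lowest_gradient_value * (1.0 - reduction)


def gradient_to_levels(ts, offset: float, bit_depth: int = 4) -> list[int]:
    """Map gradient positions t in [0, 1] to integer screen levels from offset up to full."""
    max_level = (1 << bit_depth) - 1          # 15 for a 4-bit screen
    levels = []
    for t in ts:
        v = offset + t * (1.0 - offset)       # t = 0 -> offset, t = 1 -> full exposure
        levels.append(round(v * max_level))   # quantize to what the screen can show
    return levels


ramp = gradient_to_levels([i / 100 for i in range(101)], offset=0.5)
print(sorted(set(ramp)))  # only levels 8..15 are ever used
```

With the offset at half brightness, a smooth 101-sample ramp collapses onto just 8 distinct levels (7 steps), which is why the exact grey offset matters so much more on a 4-bit screen than on an 8-bit one.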

When I say overlapping layers, I mean that for each layer I sample a certain distance for the gradient. I could just use the layer height, but I find that 3-4x that, which results in wider overlapping gradients, gets much smoother results. This could theoretically reduce detail, but because the gradients are mapped from the surface they can actually bring out more detail. The only problem is that it makes prints taller, but I have solved this by subtracting a gradient from the downward-facing faces. I haven't tuned that fully, but it's amazing, because it can actually find details that no slicer can…
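A minimal sketch of the overlap idea for a single pixel column. It assumes the model's upward-facing surface at that pixel sits at `surface_z_um`, and that the sampling window is `overlap` layer heights tall and ends at the top of the current layer. That window placement is my guess at how "sample 3-4x the layer height" works; it is at least consistent with prints getting taller, because layers above the surface still receive some exposure. The downward-face compensation is not modeled, and the function name is hypothetical.

```python
def overlap_fraction(surface_z_um: float, layer_top_um: float,
                     layer_um: float, overlap: float = 4.0) -> float:
    """Gradient value (0..1) for one pixel column on one layer.

    The sampling window is overlap * layer_um tall and ends at this layer's top,
    so with overlap > 1 the ramp spreads over several layers. overlap = 1 is
    plain per-layer VAA: the fraction of this layer that lies below the surface.
    """
    window = overlap * layer_um
    frac = (surface_z_um - (layer_top_um - window)) / window
    return max(0.0, min(1.0, frac))  # 1 = solid, 0 = no exposure


# Surface at 120um, 50um layers: the layer topping out at 150um
print(overlap_fraction(120, 150, 50, overlap=1.0))  # 1L
print(overlap_fraction(120, 150, 50, overlap=4.0))  # 4L, wider and smoother ramp
```

With 1L that layer gets 0.4 and the layer above gets nothing; with 4L it gets 0.85 and the ramp keeps going for a few more layers (e.g. 0.35 at a layer top of 250um), which is the wider overlapping gradient described above. Fractional overlaps such as 1.5 work the same way.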

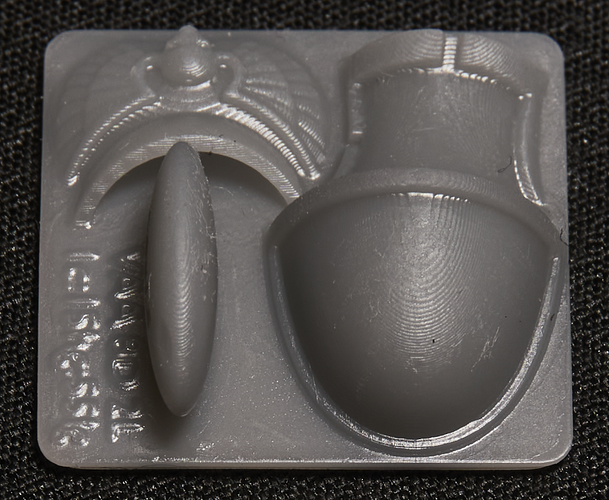

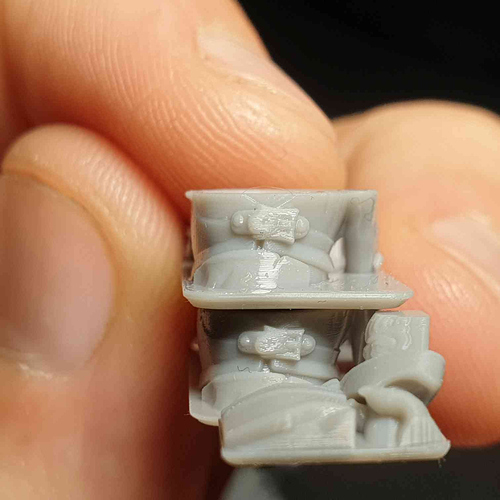

The left here is with the bottom-side compensation. You can see on the right, for example, that the trim around the coat is thicker on the Z axis because it doesn't have this compensation, but it's very thin on the left because the compensation is too strong.

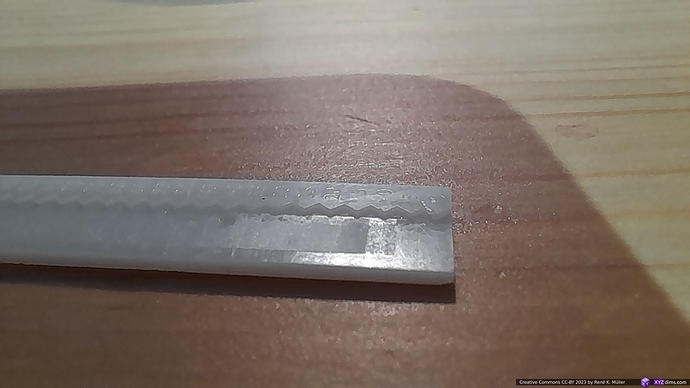

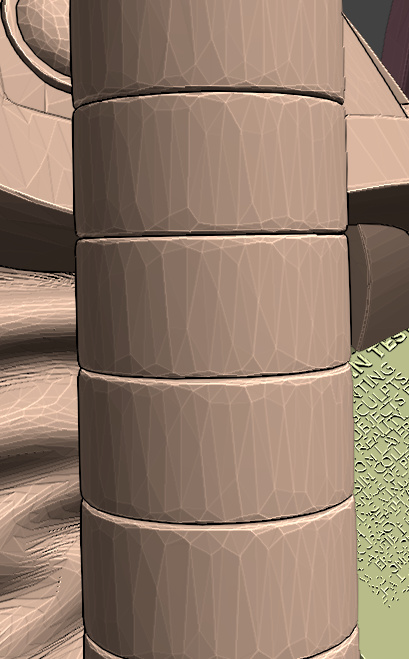

This is the staff model. The gaps between the rings are stupidly small; as you can see it's basically nothing, yet the slicer was able to read them and, in its overcompensation, turn them into real gaps. If this were tuned better it could accurately make super thin vertical gaps.

Here is another example: top before compensation, bottom after. As you can see, the top is vertically bloated.

Which Anycubic machines specifically do this XRP stuff? I assume it's just the DLP? I can't see a normal LCD printer doing that; e.g. there is one that uses the same screen as my Saturn, and now the M5 uses the same screen as the Saturn 3.

"So the Saturn 1 is an LCD printer, right?

Did you do any other modifications?"

not really.

“How many pixels overlap do you have between gray shades among layers?”

As I said, 3-4 layers works well; it's not so much about pixels. But when the gradients get small it gets harder, because it acts more like normal AA, where the layers just change in XY width rather than making thin layers. Also, the size of the layer/shape changes how it cures, or whether it cures at low values at all, because of bloom. If your printer, like mine, has lots of bloom, then you actually rely on that in your exposure; if you print super thin things, they're underexposed due to the lack of bloom. That's why LCD printers can't just print single-pixel features like DLP.

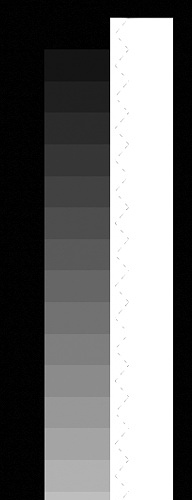

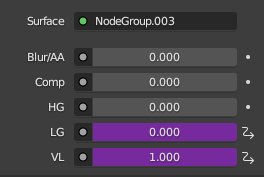

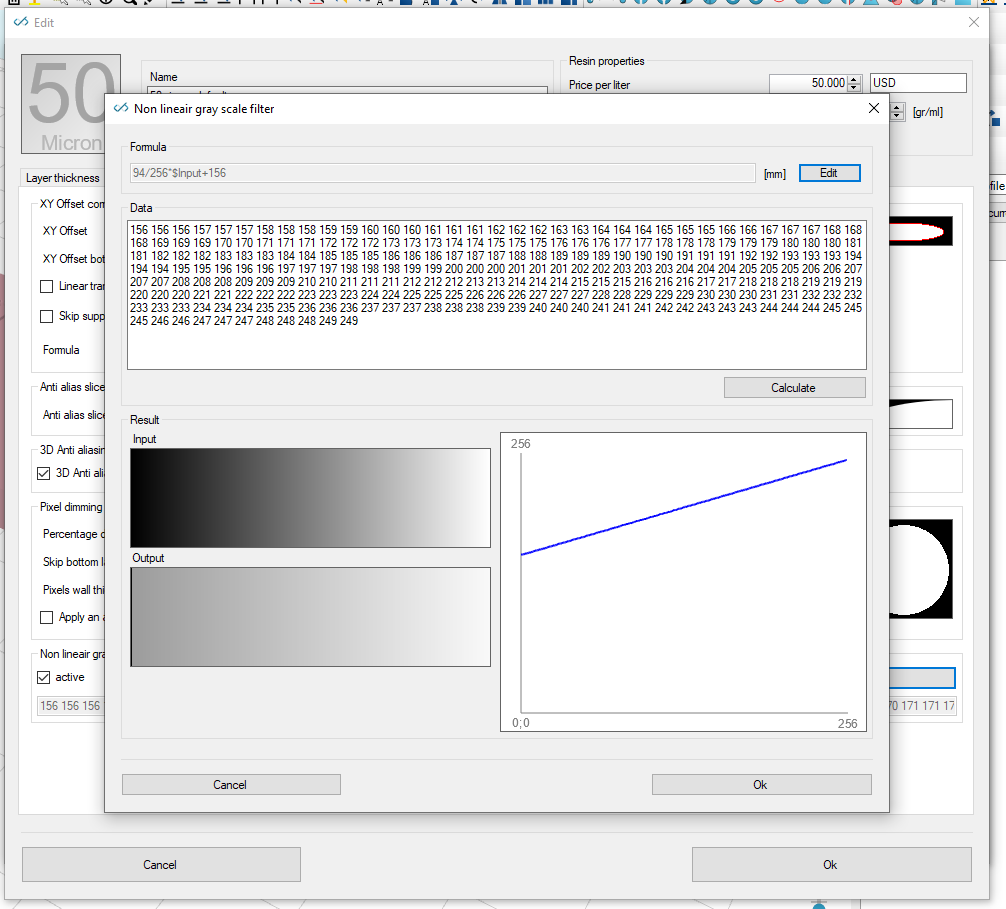

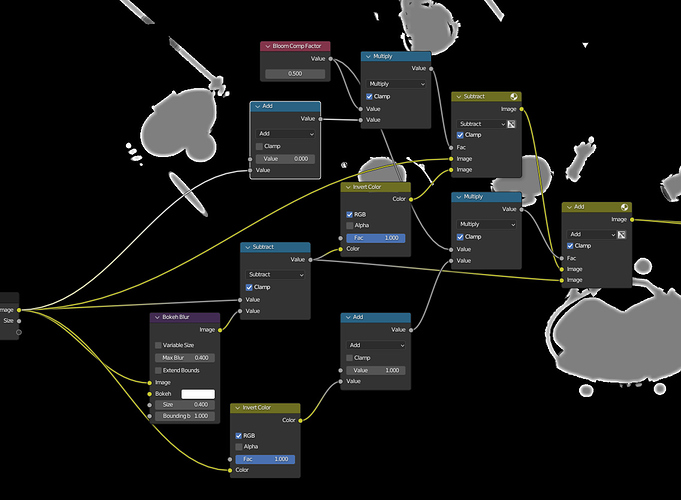

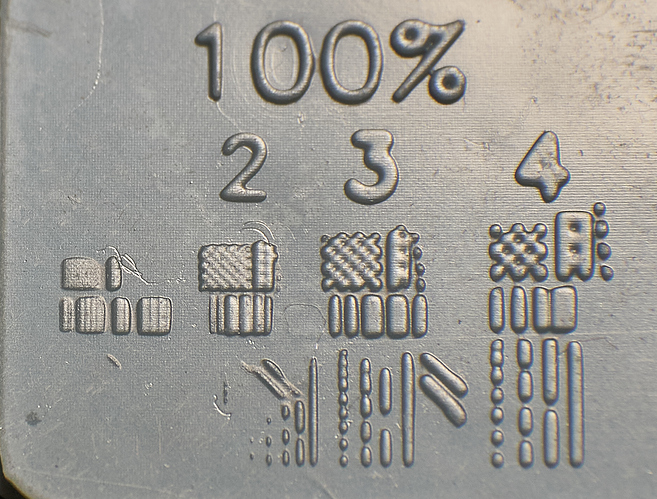

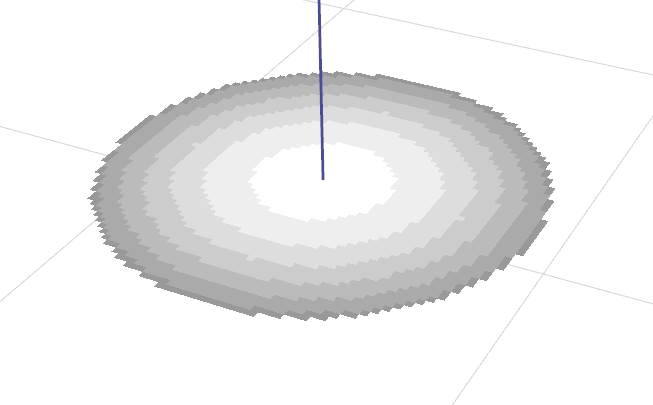

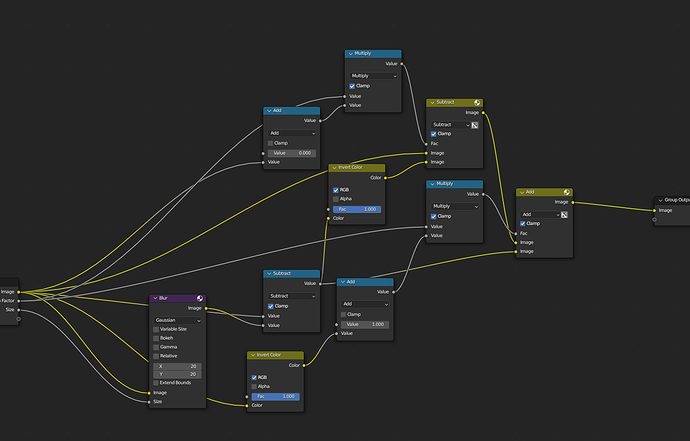

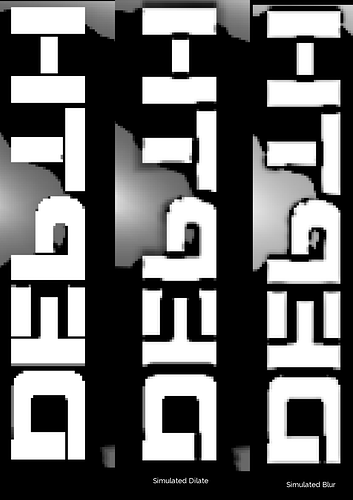

I’ve tried making some bloom compensation things like this

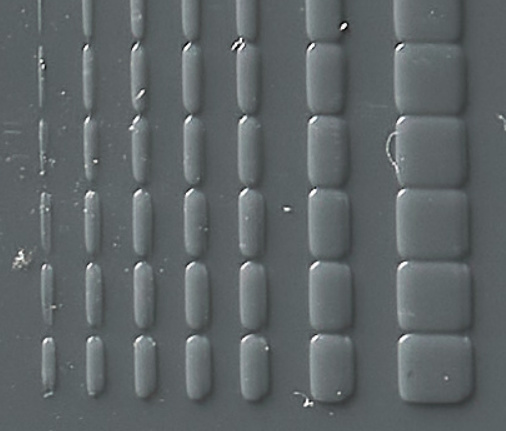

which should help with that. I think a perfect exposure is one where you expose enough that a single pixel cures, and then dim everything else so it's dimensionally accurate. But as I touched on, lower intensities can be an issue, so there is a limit to how far that could go; at a certain point better hardware is needed, and new printers are far better than mine.
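One way to sketch the "expose for a single pixel, dim everything else" idea: scale each lit pixel down according to how many of its neighbors are also lit, so an isolated pixel keeps full brightness and pixels inside solid areas (which get plenty of bloom from their neighbors) are dimmed. The neighborhood size, the linear scaling and `min_scale` are my own assumptions for illustration, not a measured bloom model, and `bloom_compensate` is a hypothetical name.

```python
def bloom_compensate(mask, min_scale=0.6, radius=1):
    """Return per-pixel brightness (0..1) for a binary layer mask.

    Isolated lit pixels stay at 1.0; a pixel whose whole neighborhood is lit
    drops to min_scale. Off-screen neighbors count as unlit.
    """
    h, w = len(mask), len(mask[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue  # unlit pixels stay dark
            lit = total = 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    if dy == 0 and dx == 0:
                        continue
                    total += 1
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w and mask[yy][xx]:
                        lit += 1
            density = lit / total  # share of lit neighbors, a crude stand-in for bloom
            out[y][x] = 1.0 - (1.0 - min_scale) * density
    return out
```

The floor set by `min_scale` is where the low-intensity limit mentioned above comes in: dim a solid area too far and it simply stops curing.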

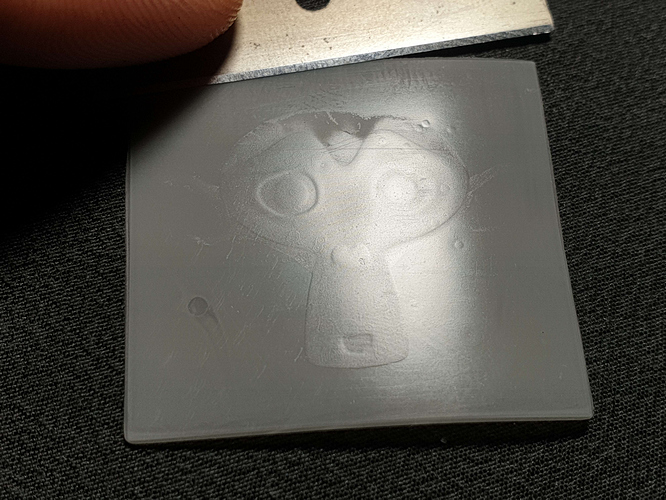

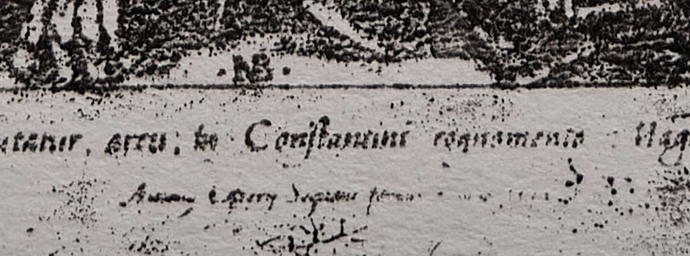

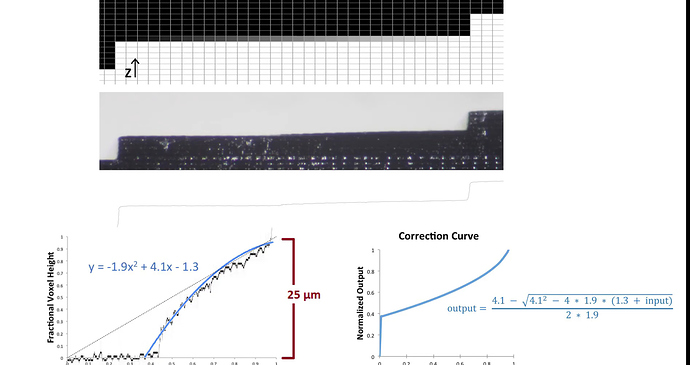

Back to printing small low-intensity things: here is a test I made to learn more about that and about how resin blooms. It's not just overlapping light; resin has a kind of surface tension, which is why XYAA works even on DLP. I imagine it like welding, where there is a hot area that you lead around by adding energy.

There is a lot here, but what's relevant is just that on consumer LCD printers, especially old ones like mine, bloom is king; without it things just don't cure.

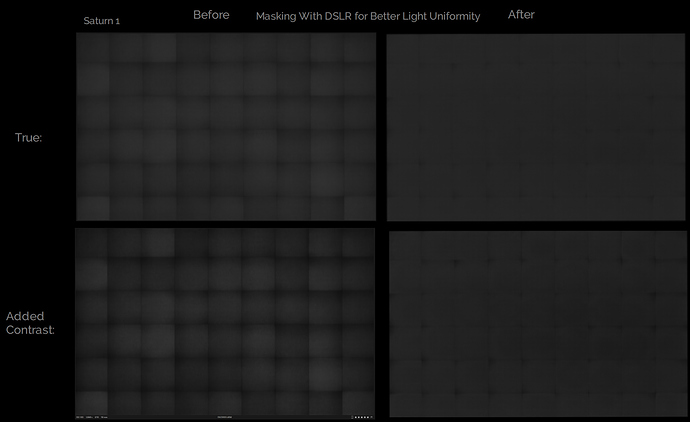

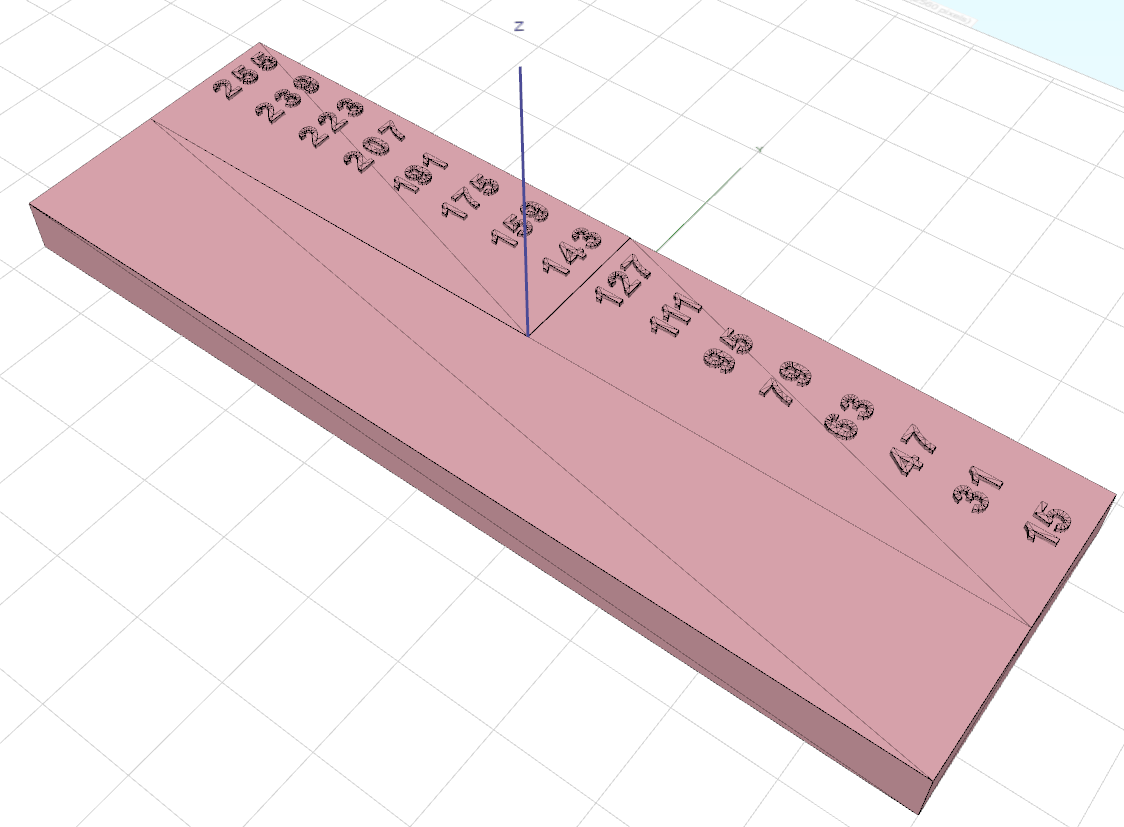

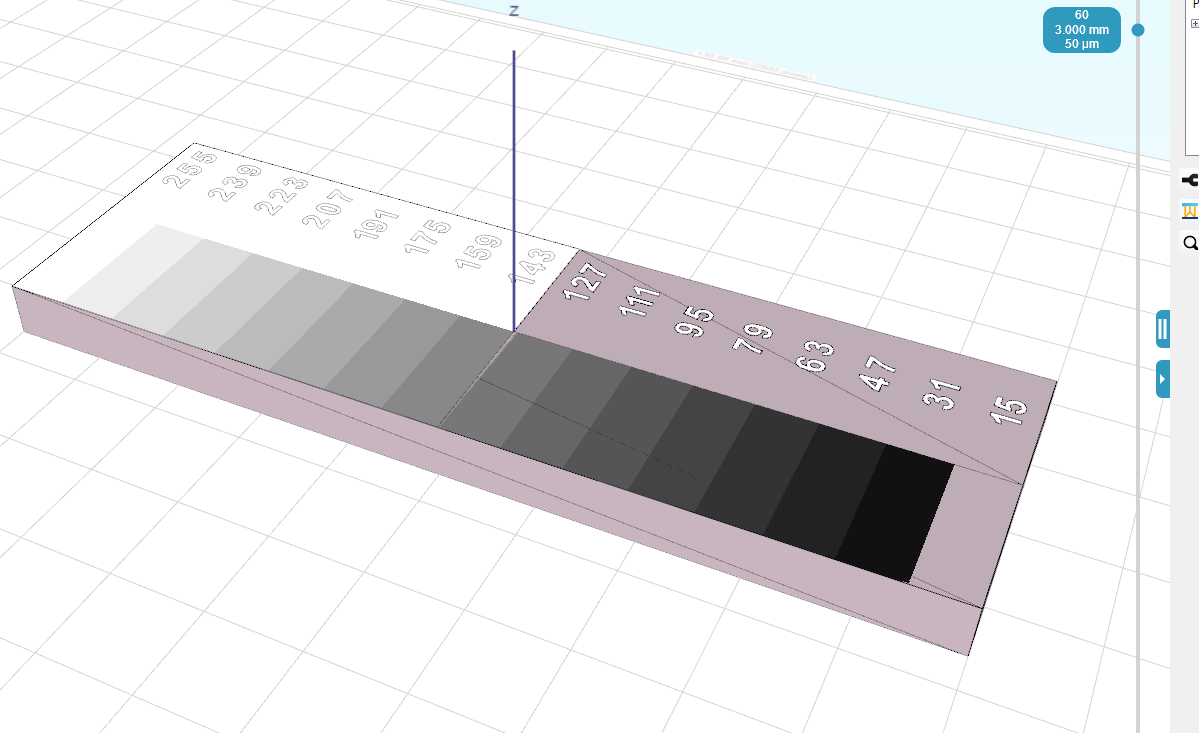

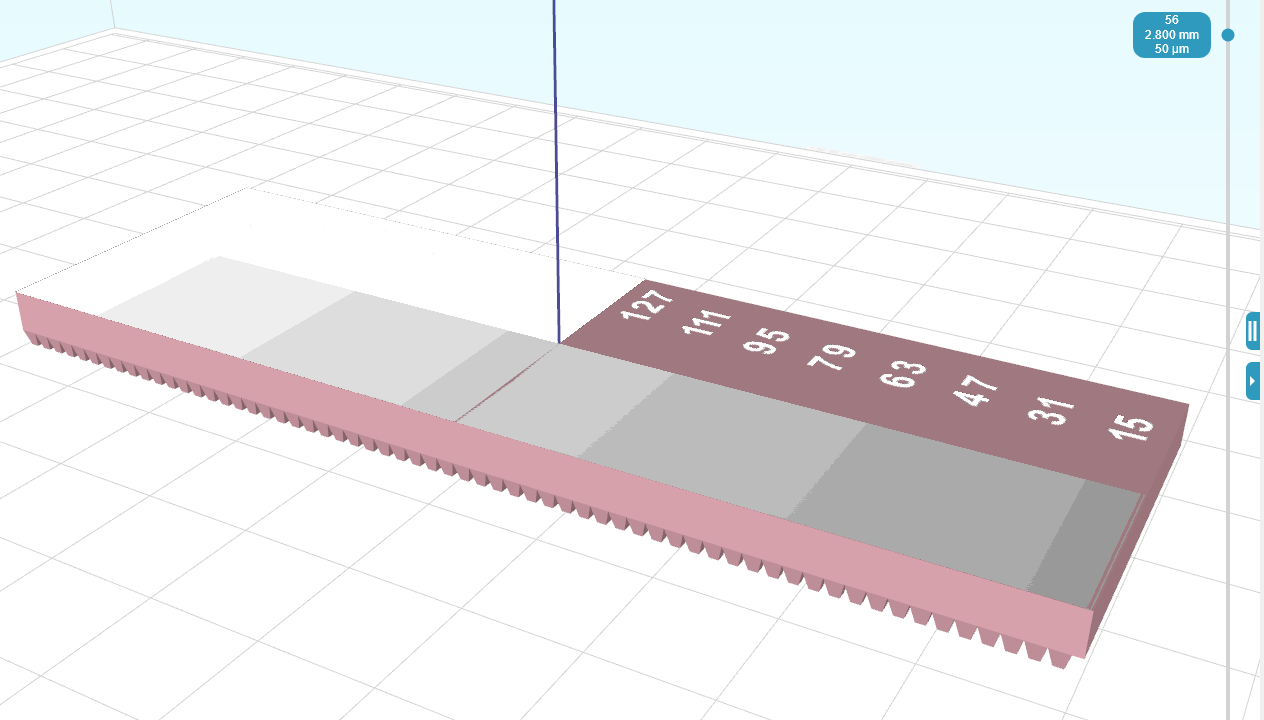

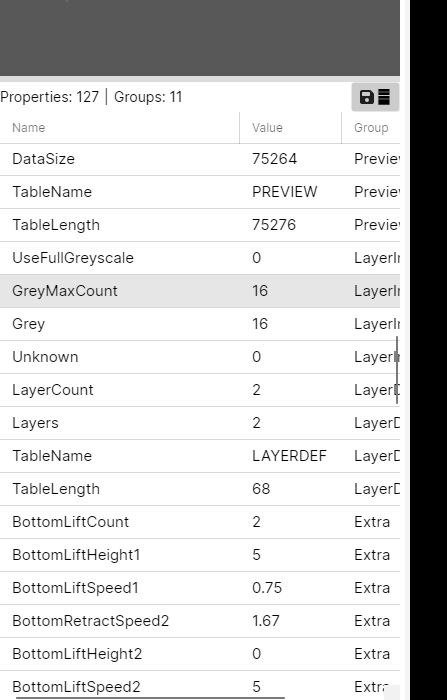

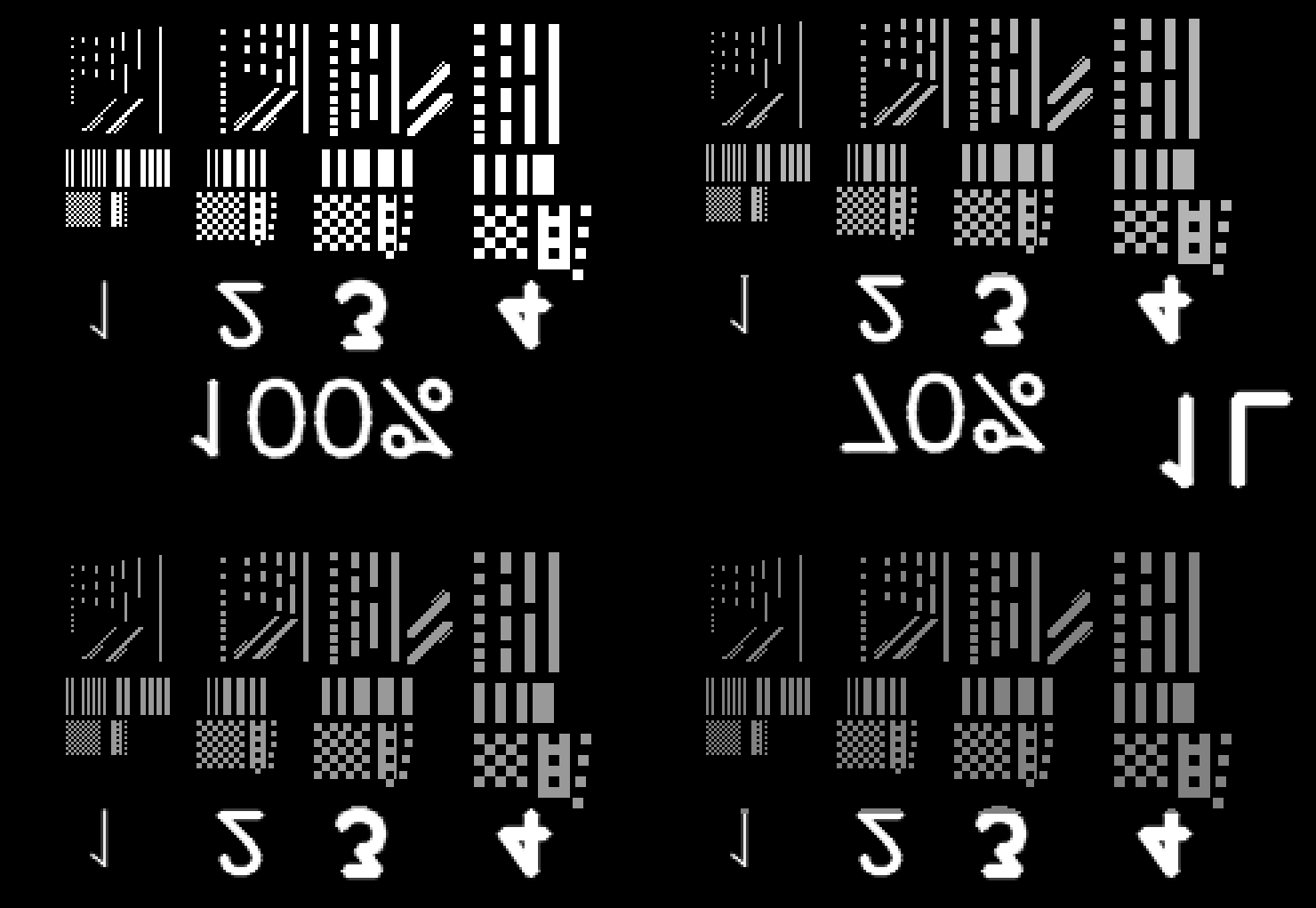

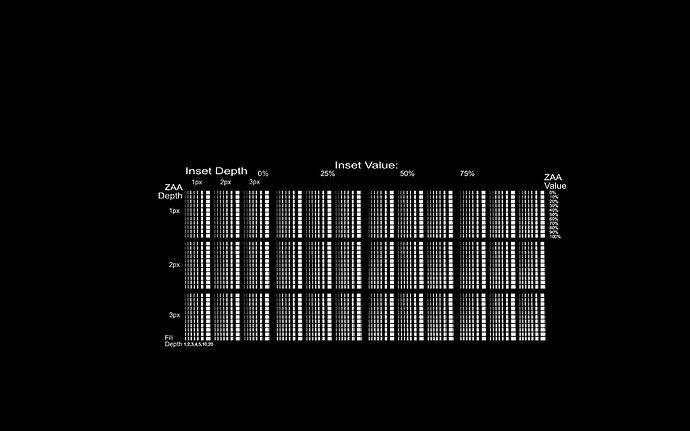

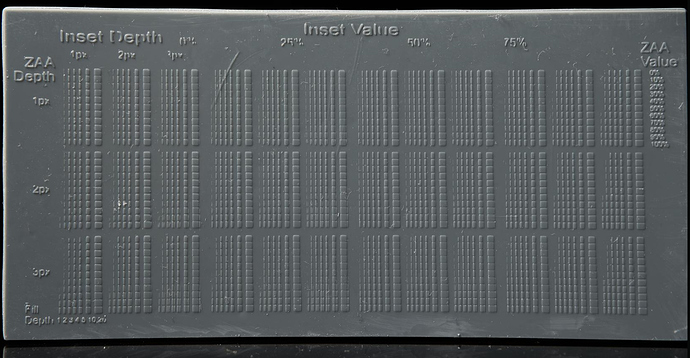

I then made a test file with 2520 test patterns (this is a screenshot, not the actual image) to find how small I could get vertical gradients to form by using gaps to reduce bloom just enough.

I did get some very thin 2px gradients with this, but when I made the slicer do this it didn't help. I haven't tried very hard to get it to work, so I might try again later. The thing is, while I really struggle to get pigmented resins to do VAA at less shallow angles, in this video Dennys just magically got such angles smooth with normal Chitu blur AA: https://www.youtube.com/watch?v=cGAgyRVK32g&ab_channel=dennyswang

I need to get more info from him about this, but I think it might have worked for him because he has a higher-res printer with more parallel light than me. Or perhaps it just worked because the high blur had no grey offset: while grey offset is good for large layers, perhaps it's too much for steep layers / thin gradients, and the high bloom from these layers (which I tried to avoid with the techniques above) could just be avoided with a lower grey offset. Before the tests above I did try using a normal mask to dim the lower grey offset a bit to compensate for this, but I didn't take it down to no grey offset, I just made it slightly darker, so perhaps I will try again at some point with a bigger dip.
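If the grey offset really is too strong for thin gradients, one hypothetical way to apply "a bigger dip" is to make the offset depend on how wide the gradient is: the full offset for wide gradients, dropping toward a smaller (possibly zero) offset for thin ones. The linear blend, the width measured in pixels, and all parameter names are assumptions for illustration only.

```python
def offset_for_width(width_px: float, base_offset: float,
                     thin_offset: float, full_width_px: float) -> float:
    """Hypothetical width-dependent grey offset.

    Gradients at least full_width_px wide get base_offset; narrower ones are
    blended linearly down to thin_offset (0.0 means no grey offset at all).
    """
    if full_width_px <= 0:
        raise ValueError("full_width_px must be positive")
    t = max(0.0, min(1.0, width_px / full_width_px))  # 0 = thinnest, 1 = wide
    return thin_offset + t * (base_offset - thin_offset)
```

Setting `thin_offset` to 0 reproduces the "no grey offset" case that may explain the smooth steep angles in the video, while wide gradients keep the offset that works well for large layers.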

Also, back to how I'm overlapping layers: it's kind of cheating, and it would be ideal to do VAA without it, but it helps so much. E.g. with 4x overlapping layers, thin gradients are 4x wider, and it doesn't matter if the grey offset isn't perfect. If it's too high, the lowest value is being projected at an area 3 layer heights away, so it won't cure anyway; if it's too low, that layer will still overlap the previous ones well enough. (When my tests say 4L or 1L, that's the layer overlap.) Now, while it's more forgiving, it can still be a problem: you don't want the exposure too low when doing this, because then it can cause flaky, partially cured layers.
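The forgiveness argument can be seen from the per-layer ramp. Assuming the gradient value falls off linearly over `overlap` layer heights above a surface that lies exactly on a layer top (my simplification, with a hypothetical function name), layer j past the surface gets `1 - j / overlap`. With 4L the smallest non-zero value lands on the layer whose top is three layer heights above the surface, so an offset that is slightly too high mostly affects a layer that barely matters.

```python
import math


def layer_ramp(overlap: float) -> list[float]:
    """Gradient values for successive layers, starting at the layer whose top is the surface.

    Layer j gets 1 - j / overlap; only layers with a positive value are listed.
    Fractional overlaps (e.g. 1.1 or 1.5) are allowed.
    """
    if overlap <= 0:
        raise ValueError("overlap must be positive")
    n = math.ceil(overlap)  # number of layers with non-zero exposure
    return [1.0 - j / overlap for j in range(n)]


print(layer_ramp(4))    # [1.0, 0.75, 0.5, 0.25]
print(layer_ramp(1))    # [1.0]
print(layer_ramp(1.5))  # one extra layer at about a third of the ramp
```

A small fractional overlap such as 1.1 adds just one faint layer (about 0.09), which is a way to keep close to 1L accuracy while still overlapping slightly.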

E.g. here: overlapping layers with too low a grey offset vs no VAA

(and above I posted good grey offset with overlapping VAA layers on this model)

I posted this image before; this is with 1L to show how the lower grey offset affects that. Too low and you don't cover the previous layer; too high and there is still a layer edge, which is more visible than it needs to be. So it's very hard to get this perfect. Without perfect bloom compensation it's actually impossible, because the correct grey offset won't be the same on a large gradient vs a thin one vs a long thin one, etc.

And back to Dennys' findings: he just used blur from Chitu, and that blur will make a small overlapping gradient on every layer until the gradient gets so shallow that they don't overlap (that's where my slicer does better). So if even that overlaps, why not overlap VAA? I get that 4L might not be perfectly accurate, and this is where my techniques differ from the Ember team: I want visual quality, so it doesn't need to be as technically perfect as 1L is. However, if you want that 1L technical accuracy, you should still overlap by a small amount; my slicer can do any overlap, e.g. I can just type in 1.1 or 1.5L for an object and it will work. Also, if you only have 4-bit Anycubics with a usable range of about 7 steps, then getting the perfect grey offset will be harder. Do you not have any other printers?

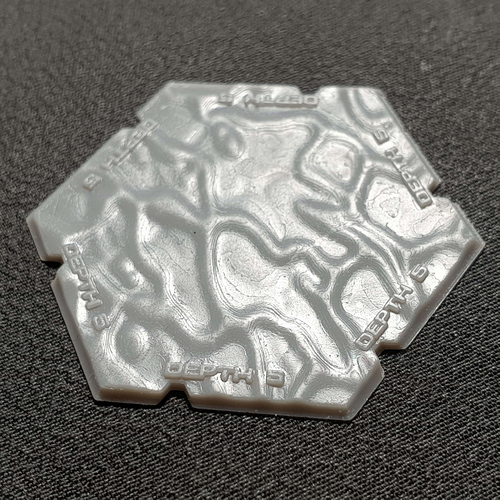

Also, here is a file I sliced for a friend with an Anycubic printer. Unfortunately there isn't a non-VAA one beside it to compare, but I have a pic from someone else who printed the same thing without VAA.

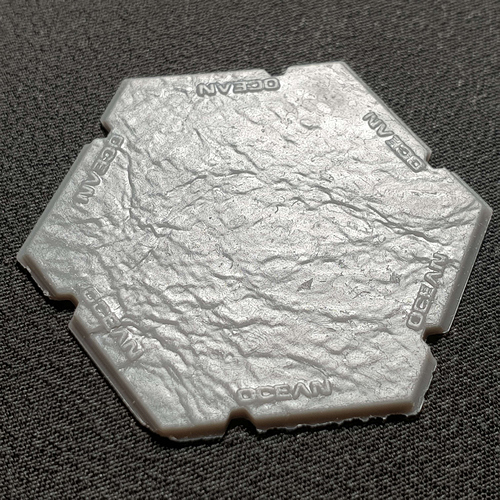

Here are some of my prints of these bases.